THE WAY OF CAMERON

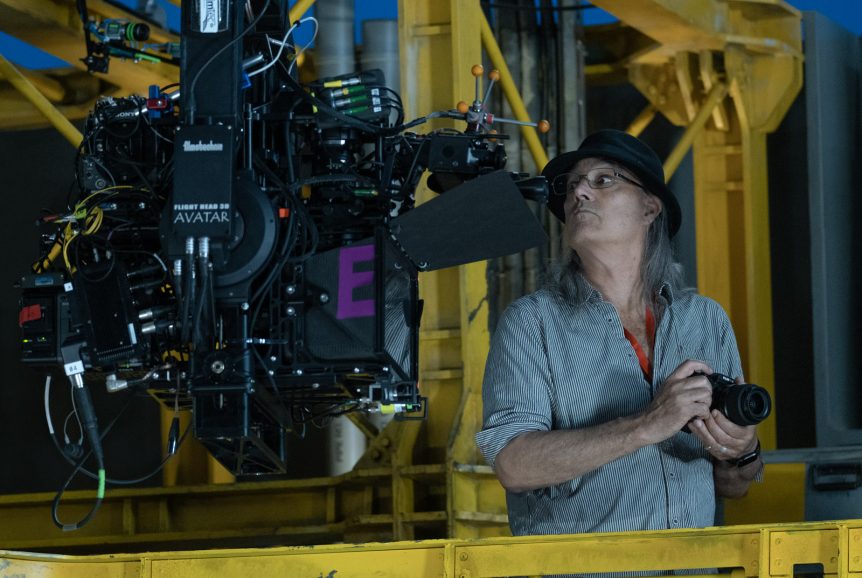

Over a decade since the world first encountered the blue-skinned Na’vi tribe, James Cameron’s ground-breaking futuristic universe returns for Avatar: The Way of Water. Long-time Cameron collaborator Russell Carpenter ASC took on the titanic task of lensing the much-awaited sequel, a triumph of filmmaking technology.

Whether capturing flying Harrier jets in True Lies or the sinking replica of an infamous luxury passenger ship in Titanic, Russell Carpenter ASC is no stranger to the epic storytelling ambitions of James Cameron, which are always pushing the boundaries of creative and technical achievement. The duo reunited for Avatar: The Way of Water and the yet-to-be-named Avatar 3. In The Way of Water, Jake Sully (Sam Worthington) and Neytiri (Zoe Saldaña) flee their forest home to escape the invasive Resources Development Administration and seek refuge for their mixed family of Na’vi and human children with the coastal Metkayina clan on Pandora. As much as the first of four proposed sequels is a new adventure on the exoplanetary moon that orbits the gas giant Polyphemus after a 13-year absence, it represents a new chapter for the cinematographer who made his feature debut in 1984.

“This was cutting-edge motion picture technology and I wanted to experience another Jim Cameron ‘challenge’!” laughs Carpenter. “Then after two-something years of working on it, I think, ‘Now, I really want to do a much less complex movie.’ Basically, when you come onto something like this as a cinematographer you learn as much as you can from the production designers, VFX people and other artists who have been developing the look of Avatar: The Way of Water and who have been working on the picture for years.”

The film is constructed in many layers. “After you get the script, worlds have to be designed – not just Pandora but also the man-made environment of the RDA,” says Carpenter. “You start to inhabit that. Jim did virtual location scouts in which he could make significant changes to a location if details needed to be changed. Once Jim gets the layout right, he starts to introduce members of his ‘troupe’ who are talented performers or athletes or mimes in performance capture suits who Jim relies upon to block out his scenes. Once Jim blocks the scene out, he goes into editing that and gets a sense of whether he likes that. Afterwards it gets to a finer level where Jim finally brings his actors in and performances are then captured with the actors in performance capture suits and tiny cameras that record their subtle facial movements. More editing is done, and then Jim virtually captures all his chosen angles for a scene, even though the actors themselves are no longer present.”

HUMAN CONNECTION

One of the technical marvels is the ability to capture the emotional nuances of the human face. “They did a lot of studying of the movement of the human face, not only at its surface but on the layers below. All these subtle emotional movements in the human actors are now represented in their Na’vi characters,” states Carpenter. “Not only what is happening on the surface but all of what we call the subconscious cues of what is happening with the muscle structure under the skin.”

Carpenter had to learn how to virtually light scenes. “This early period allowed me to observe the work of the two production designers. Ben Procter did the manmade aspect of what was happening on Pandora and Dylan Cole was tasked with creating a Pandora which is much more refined [from what was portrayed in Avatar]. Everything that you see is based on something real. Even the clothes were designed and made so that the CG artists could have real texture and colour to work from. You would have people walking back and forth wearing these clothes to see how the wind affected them. There are more types of fauna and animals on the planet and a lot of them live underwater and those had to be created. They were based on something that you as a viewer could relate to, whether it was a porpoise or whale or jellyfish, so you don’t have things moving around in a way that feels computer generated.”

Blue skin changes colour under different lighting conditions. “In cooler lights or daylight, blue skin looks damn good,” notes Carpenter. “There are a lot of firelight scenes in this movie and when the orange light hits the blue skin it goes grey. Grey was probably the last colour we wanted to see. Jim is a fan of bringing nuance and varied colour into the scenes. Even white light has cool and warm nuances. Some of the concept art for Avatar: The Way of Water reminded me of the Hudson River School of the mid-19th century which is very beautiful.”

Beyond lighting, the camera movement in the actual ‘live-action’ photography had to precisely match what Cameron was doing with the virtual camera. “Instead of moving two feet Jim can say, ‘Give me a 10:1 ratio,’ and now his virtual camera is moving 20 feet for every two feet Jim moves on stage. He may want to make a change in midstream and in the computer world it is simple to move a waterfall this way and that in fairly short order. My gaffer Len Levine [chief lighting technician] observed this process and said, ‘Okay, if Jim is working like that, I can extend the system to our motion picture stage.’ Len designed a system that was like moving lights on trusses that could be moved this way or that. They basically circled the action and then that was mixed with a soft light coming from the top.”
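The 10:1 ratio Carpenter mentions is, at its simplest, a multiplier applied to the operator's physical movement. The following is a minimal sketch of that arithmetic only, assuming a uniform scale on all three axes; the function name `scale_move` and the (x, y, z) convention in feet are illustrative assumptions, not a description of the production's actual virtual camera software.

```python
def scale_move(stage_move_ft, ratio=10.0):
    """Map a physical move on stage to a virtual camera move.

    stage_move_ft: (x, y, z) displacement of the operator, in feet.
    ratio: virtual-to-physical scale, e.g. 10.0 for a "10:1 ratio".
    Hypothetical helper for illustration only.
    """
    return tuple(ratio * d for d in stage_move_ft)


# Two feet of travel on stage at 10:1 becomes 20 feet in the virtual set.
virtual_move = scale_move((2.0, 0.0, 0.0), ratio=10.0)
print(virtual_move)  # (20.0, 0.0, 0.0)
```

At a 1:1 ratio the same function returns the move unchanged, which corresponds to the virtual camera tracking the operator directly.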

VIRTUAL AND PRACTICAL

In ‘live-action’ filming, the Simulcam was an indispensable tool for combining the virtual and practical elements for framing and composition. “There is this point where you go in, see the cut scene and there’s tonnes of detail in that,” remarks Carpenter. “Simulcam is a computer system that marries the virtual imagery to the action in front of our live-action camera. This was refined over the years to the point where depth sensors placed on the camera could ascertain exactly where our live actors were in the virtual environment and then could generate extremely accurate composite imagery in real time. Not only could you have the Na’vis walk around the actors but also in front of them. In terms of knowing precisely the way our lighting is supposed to be, Simulcam was a game-changer.”

Above-water sequences were lit with LED panels. “We had these big LED panels with all the fire effects going on them and that would be reflected on the water,” reveals Carpenter. “But that was easier said than done because the panels actually had to come down and meet the water, which would be an electronic disaster. The solution was to put the LED panels over the water and have a huge set of mirrors that go down under the water.”

Underwater shooting was also problematic. “I did Titanic, and you would think I would remember how long things take to light underwater. We used Astera tubes which run on batteries and can be controlled from a lighting board so depending on what type of water we would just put these things underwater and get a sense of the light. That helped us a lot. We wound up bringing in people from Australia who had experience working underwater on Australian films to supplement the crew that we had.”

The tulkun hunt sequence was shot by New Zealand cinematographer Richard Bluck NZCS. “There was a huge stage with the tank that was only about five feet deep,” reveals Carpenter. “The gimbal was amazing. The invading humans piloted nimble whaling boats that could go through the rough seas and fly up in the air, hit the waves hard, and turn this and that way. We could shoot handheld on these boats but a lot of it was also done extending the Scorpio crane into the tank and it gave us a lot of flexibility to move around the boats. We shot bluescreen in the background. I figured it out when I saw the previs.”

SPIDER SHOTS

The story evolved over the course of principal photography with Spider (Jack Champion), the adopted human son of Jake Sully and Neytiri, getting more screentime. “We had so many different environments that Spider was in and it was critical to embed Spider into the fabric of the scene. There were little issues we would work out and some of them you didn’t see until you actually saw Spider in a virtual environment with our Simulcam system. There is so much time-consuming coordination that has to be done to get the camera melded with the exact position of Jim’s virtual camera. It takes about 40 minutes to get everything up and running, and then we are working on the lighting. We have background players come in and stand-ins quite good at miming where Spider is supposed to go. On a good day Jim would come in and say, ‘Looks good to me. Let’s shoot.’ Let’s shoot meant that we probably had an hour and a half or two hours. And then he would work his way up to, ‘This is great.’”

In an effort to make the handheld Steadicam rig lighter, Cameron and Sony spent extensive time developing the Sony Rialto Camera Extension System for the Sony Venice. “On the Steadicam rig you only have the camera block, and all of the processing would go through an 18-foot cable that would be processed by somebody carrying the rest of the camera on their back,” explains Carpenter. “That cut the weight of the camera down quite a bit. Zoom lenses are notoriously heavy and we still had to find matching zoom lenses for our lightweight rigs. We discovered a prosumer line of zooms that Fujinon introduced recently – their MK series. The MK lenses are eight inches long and each one weighs just 2.2 pounds. The amazing thing was they were just as sharp as the top-of-the-line Fuji lenses.”

Most of the footage was captured in the 21mm to 24mm range, sometimes up to 35mm. “The bulk of the shooting was done on the Fujinon MK18-55mm T2.9 lens and in the world of shooting 3D you want to stay on the wider lenses,” the DP notes. “The other one went from 50mm to 135mm. We barely got on that lens at all.”

It was important to avoid having 30 different configurations of cameras. “We said, ‘Okay, we have our workhorse cameras. One is going to be Steadicam and the other is our handheld.’ Then we have our dolly camera, and we are also going to have our Scorpio crane camera. Those could be longer lenses. We didn’t have to worry about the weight on those. Those turned out to be Fuji Cabrio lenses that were 19mm to 90mm.”

The native stereo image had to accommodate the IMAX format. “Instead of centre punching our frame we moved our target area slightly higher to accommodate the fact that somewhere along the line there were going to be both IMAX prints and 2.39:1 prints,” states Carpenter. “When shooting, we made sure the framing made sense for both aspect ratios.”
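The idea of raising the target area rather than centre punching can be expressed as a small piece of geometry: a wide extraction is cut from a taller frame, and its position is nudged upward from centre. The sketch below is illustrative only. The frame dimensions, the roughly 1.90:1 tall-frame ratio and the `raise_fraction` value are all assumptions made for the example, and `wide_extraction` is a hypothetical helper, not the production's framing guide.

```python
def wide_extraction(frame_w, frame_h, wide_ratio=2.39, raise_fraction=0.25):
    """Return (top, bottom) rows of a wide crop inside a taller frame.

    raise_fraction = 0.0 gives a centred ("centre punched") crop;
    raise_fraction = 1.0 pushes the crop flush with the top of the frame.
    All values here are illustrative assumptions.
    """
    crop_h = frame_w / wide_ratio      # height of the wide extraction
    spare = frame_h - crop_h           # rows left over above and below
    if spare < 0:
        raise ValueError("frame is wider than the requested extraction")
    # A centred crop leaves spare/2 above it; raising the target shrinks that gap.
    top = (spare / 2) * (1 - raise_fraction)
    return top, top + crop_h


# Assumed frame of 2390 x 1258 pixels (roughly 1.90:1), target raised by a quarter.
top, bottom = wide_extraction(2390, 1258)
print(round(top, 2), round(bottom, 2))
```

With these assumed numbers the wide crop sits higher in the tall frame than a centred crop would, so a composition placed in the shared area holds up in both versions.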

It has been quite an education collaborating with Cameron over the course of three movies. “True Lies was a major challenge for me, and I was green when that happened. Titanic was hard. We were surrounded by water all the time. It takes it out of you. And there was the ‘bigness’ of Titanic. Here it was technical complexity and I’m not a technical guy, but I know people who are.”

Imagining the final shape of the film was difficult for Carpenter. “Each day’s shooting was complex and time-consuming. I didn’t have that payoff at the end of the day like I might on a more conventional film of saying, ‘That was such a great scene. I loved watching the actors perform.’ You don’t get that, but I did experience a huge payoff much later when I saw the whole movie as rendered by WETA FX and I’m going, ‘Wow.’”