REALTIME crafts real-time environment with virtual camera tech for Doctor Who: Wild Blue Yonder

Feb 2, 2024

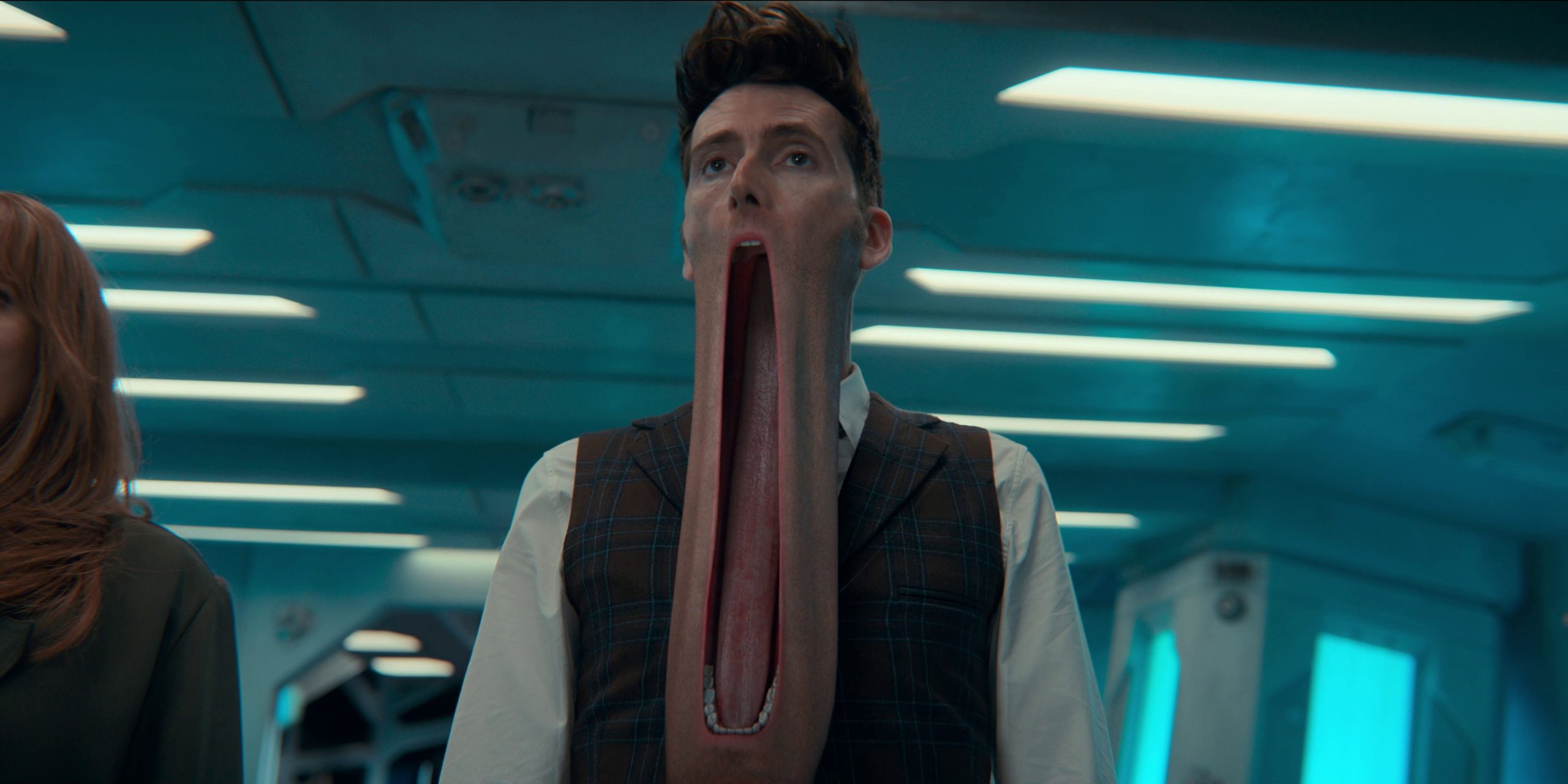

REALTIME, the UK-based CG studio, recently worked on Doctor Who: Wild Blue Yonder, one of three special episodes to commemorate the 60th anniversary of the show. Collaborating with Bad Wolf, REALTIME provided virtual production services and VFX for the hour-long episode, which sees the return of the much-loved pairing of David Tennant in the role of The Doctor and Catherine Tate as Donna.

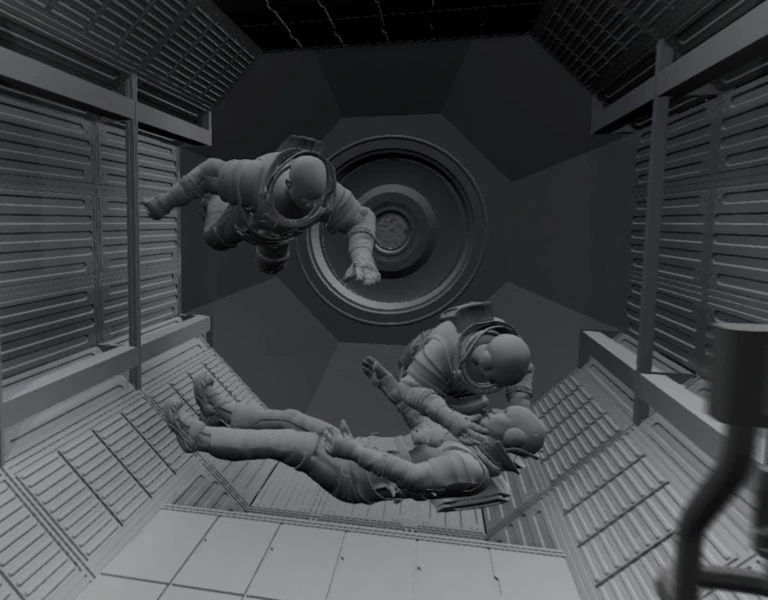

The ambitious script, written by showrunner Russell T Davies, is mostly set within the corridor of a ghost spaceship, floating at the very edge of the universe. Led by VFX supervisor James Coore, the REALTIME team crafted a real-time environment with virtual camera tech, allowing director Tom Kingsley and DP Nick Dance BSC to see a live key with the talent comped onto an Unreal environment.

James Coore talks through the process, from design to final pixel:

Design Phase

We were initially approached by Bad Wolf’s executive producers, Joel Collins and Phil Collinson, and VFX supervisor Dan May (Painting Practice) to devise a methodology for creating and rendering a large-scale CG environment much more quickly than usual whilst relieving pressure on the budget. We decided to create two digital environments in Unreal Engine: one to be used as a virtual production asset during the shoot, and a corresponding high-detail version of the same environment for the final background renders. All the final renders went through an automated process, using the EDL from the locked edit. This was a process created by Mo-Sys using their NearTime rendering system.
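As a rough illustration of how an EDL can drive automated rendering, the sketch below reads cut events from a CMX3600-style EDL and turns each one into a frame range to render. It is not the Mo-Sys NearTime implementation, whose internals the article does not describe. The EDL flavour, the 25 fps frame rate, the sample data and the names `RenderJob` and `jobs_from_edl` are all assumptions made for the example.

```python
import re
from dataclasses import dataclass
from typing import List

FPS = 25  # assumed frame rate; the article does not state it

# CMX3600-style cut event: event number, reel, track, transition,
# then source in/out and record in/out timecodes.
# Dissolves and wipes carry an extra field and are not matched here.
TC = r"(\d{2}:\d{2}:\d{2}:\d{2})"
EVENT_RE = re.compile(
    r"^(\d+)\s+(\S+)\s+(\S+)\s+(\S+)\s+" + r"\s+".join([TC] * 4) + r"\s*$"
)


@dataclass
class RenderJob:
    """Hypothetical description of one background render."""
    event: int
    reel: str
    first_frame: int  # source in point as an absolute frame count
    last_frame: int   # inclusive; EDL out points are exclusive


def timecode_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert non-drop-frame HH:MM:SS:FF timecode to a frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def jobs_from_edl(text: str, fps: int = FPS) -> List[RenderJob]:
    """Build one render job per cut event found in the EDL text."""
    jobs = []
    for line in text.splitlines():
        match = EVENT_RE.match(line.strip())
        if not match:
            continue  # skip title, FCM and comment lines
        event, reel, _track, _trans, src_in, src_out, _rin, _rout = match.groups()
        jobs.append(RenderJob(
            event=int(event),
            reel=reel,
            first_frame=timecode_to_frames(src_in, fps),
            last_frame=timecode_to_frames(src_out, fps) - 1,
        ))
    return jobs


# Made-up sample data, not taken from the production.
SAMPLE_EDL = """TITLE: EXAMPLE
FCM: NON-DROP FRAME
001  A001C003 V     C        10:00:10:00 10:00:12:00 01:00:00:00 01:00:02:00
002  A002C001 V     C        11:30:00:05 11:30:01:05 01:00:02:00 01:00:03:00
"""

for job in jobs_from_edl(SAMPLE_EDL):
    print(job)
```

Because the recorded tracking data shares timecode with the camera clips, a frame range like this would in principle be enough to look up the matching camera and lens data for each shot.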

Shoot

Mo-Sys provided the live tracking functionality through their “StarTracker” system, which tracks against an array of stickers they attach to the ceiling of the stage. Each lens was also calibrated to map its distortion, which took approximately 15 minutes per lens. To limit the impact on shoot reset times, we opted to use just two zoom lenses for the entire shoot. REALTIME and Mo-Sys both have experience in handling zoom data, so we could accommodate this option.

The only element of the set we had in camera was a small section of floor with a green screen surrounding it. On set, through the monitors, we could see a live key with talent comped onto the Unreal environment. The transcoded clip for the clean plate and the corresponding “dirty” clip with live comp had matching timecodes, which was extremely helpful when it came to final VFX. We had recently worked in conjunction with Mo-Sys on Dance Monsters, a deeply technical project of a much larger scale (over 5,000 VFX shots!). We were able to collaborate to improve functionality we had previously developed, the most important being the tools that ingest the camera and lens distortion data from Unreal and port it to other DCCs.

The Build

The ship was designed by production designer Phil Sims and the team at Painting Practice. We took their initial C4D scenes and rebuilt and retopologised each component. As we had proposed, we assembled two versions of the environment in UE: a real-time version for the virtual camera and a higher-fidelity version with an identical layout, which we used to render the final background image.

We initially built the environment in Maya, rendering it using Arnold. For the virtual camera, we baked the lighting from this into texture maps, which we then applied to the geometry in a corresponding Unreal scene. This gave us an efficient real-time scene which would perform fast enough to be used in real-time monitoring and as a means of record and playback during the shoot.

Once the edit was locked, Mo-Sys was able to use the timecode and clip information to automatically generate a render scene, swapping from the real-time to the high-resolution Unreal environment. In the render setup, we only rendered a limited number of AOVs: depth, Cryptomattes, and an ST map for the lens distortion.
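For readers unfamiliar with ST maps, the sketch below shows the general idea: each pixel of the map stores a normalised (s, t) coordinate that says where in the source image to read from, so lens distortion can be applied or removed by a simple lookup. This is a minimal pure-Python illustration, not the production pipeline. It assumes a top-left origin, coordinates in [0, 1] and nearest-neighbour sampling, whereas compositing packages often use a bottom-left origin and filtered sampling. The function names are hypothetical.

```python
def apply_st_map(src, st_map):
    """Warp `src` through `st_map` using nearest-neighbour lookup.

    src:    grid (list of rows) of pixel values, at least 2x2.
    st_map: grid of (s, t) pairs in [0, 1]; each pair names the
            source position to read for that output pixel.
    """
    height, width = len(src), len(src[0])
    out = []
    for row in st_map:
        out_row = []
        for s, t in row:
            # Scale to pixel indices and clamp to the image bounds.
            x = min(max(round(s * (width - 1)), 0), width - 1)
            y = min(max(round(t * (height - 1)), 0), height - 1)
            out_row.append(src[y][x])
        out.append(out_row)
    return out


def identity_st_map(width, height):
    """ST map that leaves the image unchanged (assumed convention)."""
    return [
        [(x / (width - 1), y / (height - 1)) for x in range(width)]
        for y in range(height)
    ]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]

identity = identity_st_map(3, 3)
# Mirroring s gives a horizontally flipped lookup, a stand-in for a real
# distortion map, which would bend coordinates rather than flip them.
mirrored = [[(1 - s, t) for s, t in row] for row in identity]

print(apply_st_map(image, identity))
print(apply_st_map(image, mirrored))
```

Rendering the map as an AOV means the distortion travels with each shot as an image, so any compositing tool that can do this kind of lookup can match the CG background to the calibrated lens.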

Virtual camera vs. LED screens

The decision not to use conventional LED volumes was taken quickly, due to the nature of the show and the time constraints of the production. Using LED screens takes a lot of planning and testing, and every physical set piece or prop shot against the screen must be scanned and reconstructed digitally, so the pre-production schedule will be longer. Camera tracking is technically complex and can require a lot of resources to set up and keep running, and colour management can also be an issue. Any in-camera VFX also needs to be able to work alongside traditional VFX. From our side, it became clear that we needed to help production shoot as seamlessly as possible, providing a solution that helps them to visualise the environment without needing to use LED volumes. Just because they are available does not mean that you have to use them for this kind of show. Sometimes you need to go in a different direction and think outside of the box.

That said, we are collaborating with Nick Dance to look into a technique where we use an LED volume, but only for lighting outside of the camera’s frustum, retaining a constant green colour behind the talent as part of the LED display. This would give us a perfect green screen while not having to commit to the contents of the LED, retaining the flexibility to change the background at a later stage. We are due to test this early this year.

Benefits

Having the functionality to frame the shot with a real-time background during the shoot is invaluable for the crew on set. It also makes realigning the shot in post-production much easier and gives a frame of reference for the whole team to understand.

Rendering in Unreal meant that our renders were 4 minutes a frame, as opposed to between 4 and 8 hours depending on shot/scene complexity.
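To put those figures in perspective, the short calculation below works out the per-frame speed-up they imply. It uses only the numbers quoted above; the variable and function names are chosen for the example.

```python
UNREAL_MINUTES_PER_FRAME = 4          # figure quoted in the article
SLOWER_HOURS_PER_FRAME = (4, 8)       # quoted range, by shot complexity


def speedup(hours_per_frame, minutes_per_frame=UNREAL_MINUTES_PER_FRAME):
    """Ratio of the slower per-frame time to the Unreal per-frame time."""
    return hours_per_frame * 60 / minutes_per_frame


low, high = (speedup(hours) for hours in SLOWER_HOURS_PER_FRAME)
print(f"{low:.0f}x to {high:.0f}x faster per frame")
# prints "60x to 120x faster per frame"
```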

The temporary live comps created during the shoot make the edit process much easier, particularly on a show where every shot has a CG background. They stop the client having to sign off an assembly which is entirely green screen. This is as much of a benefit to the production team as it is to the VFX vendors. I am always excited to be able to aid productions in that way.

Nick Dance BSC adds:

“Like all shoots with a high visual effects content, it’s vital to have a good rapport between the VFX team and the cinematographer. Collaboration is key. It was my first time working with James, Unreal and Mo-Sys, so it was incredibly important we were all on the same page. But testing, pre-vis and intensive prep all contributed to a smooth shoot.

The main advantage for me was the ability to see the background superimposed live over green screen. It was invaluable for composition and any lighting changes/interaction that needed to match and be in sync with the actors and the backgrounds. It was also great for the actors as they could see their positions on the virtual sets and react to them, which helped with performance.

We were mainly on a Technocrane, shooting on an ARRI LF. We decided to use two short Zeiss Cinema Zooms, the 15-30 and 28-80. This decision was mainly to save time on lens changes, but it also gave us the flexibility to change shot size and see the effect on the background instantly. With Mo-Sys and Unreal, wherever we panned, tilted or zoomed, the background moved accordingly with the correct lens angles and perspective change – quite astonishing!

Although a steep learning curve, it was an exciting challenge to help realise Russell’s very ambitious, creative and large-scale production. I really don’t think there was any other way to achieve this scale other than the use of Unreal and Mo-Sys. It was a thrill to work with this virtual system and collaborate with the REALTIME VFX team, including James, our director Tom and art department on this very special 60th anniversary episode.”

James Uren, Mo-Sys, adds:

“Tracking the camera and recording the camera’s pose and lens data on-set very precisely brings two big benefits. Mo-Sys VP Pro allows the production crew to see a live pre-visualisation – aiding blocking, framing and lighting. To render the whole show in a traditional way would have been time-consuming and cost-prohibitive. Here, REALTIME were able to utilise Mo-Sys’ NearTime service to automatically re-render a large number of VFX-heavy shots in Unreal Engine, saving time, maximising quality and controlling cost.”

REALTIME is an award-winning CG specialist working in the episodic, automotive and games industries. Wild Blue Yonder is available to watch on BBC iPlayer and on Disney+ globally outside of the UK and Ireland.