Dimension Studio drives virtual production workflows with AJA KONA 5 Capture Cards

Feb 11, 2022

Virtual production is transforming the way film, episodic, and live broadcast content is captured and created. On the cusp of volumetric capture powered by real-time game engines, Dimension Studio bridges creativity and technology to help clients produce world-class content through virtual production workflows. With Jim Geduldick, a cutting-edge technologist and industry veteran, at the helm of operations, Dimension Studio North America is redefining traditional production pipelines with the latest real-time technologies.

From rear and front projection to the use of translights for interactive lighting, virtual production technologies have been used across the media and entertainment industry for decades. Rapid evolution of these technologies in recent years has led to widespread adoption of real-time workflows. Game engines like Unreal, Unity, and CryEngine have advanced dramatically in their real-time capabilities, further supported by upgraded GPUs and enhanced video I/O technologies.

Geduldick remarked, “For creatives and technologists, we now have a robust toolset that allows us to be more proactive with workflows, blending between previs and the virtual art department. The speed at which we can iterate with real-time tools and go from pixel to hardware and up to an LED or traditional greenscreen has rapidly evolved. If you look at the past 18 months alone, virtual production has garnered a lot of excitement as to the current and future possibilities it holds.”

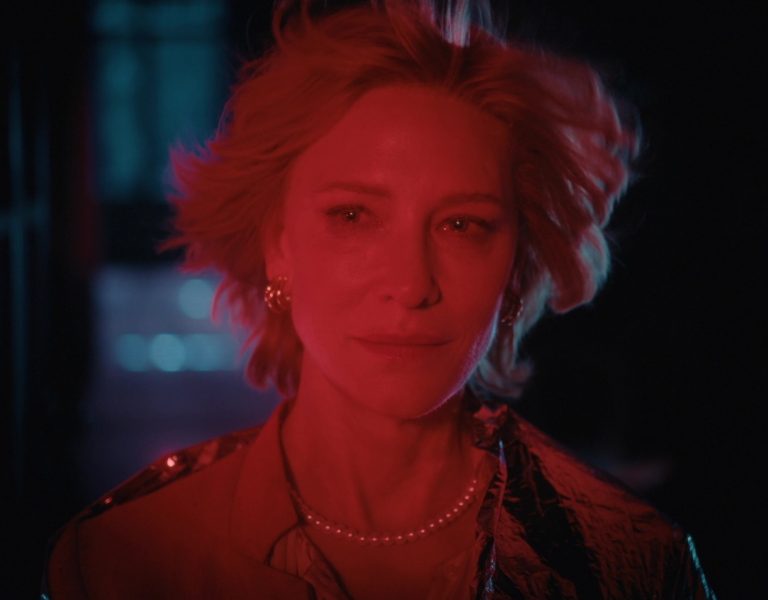

As virtual production supervisor, Geduldick blends the traditional roles of VFX Supervisor and DP while acting as a bridge between departments to ensure that digital and physical set elements mesh in real time during live productions. He leads Dimension’s virtual production team and Brain Bar, a term coined by production on “The Mandalorian” for the team of technologists, engineers, and creatives who collaborate on set and drive pixels onto stage LED walls.

On set, pairing a real-time game engine with AJA’s KONA 5 high-performance PCIe video I/O card allows Geduldick’s team to mesh live elements and CG assets with virtual environments in real time. He added, “The essence of real-time workflows is that we’re not waiting for rendering like we used to. Instead, we’re pushing as much down the pipe through AJA hardware and other solutions to make everything as quick and interactive upfront as possible. AJA’s KONA 5 capture cards are our workhorses, as we use them to send high-bandwidth, raytraced assets with ease. This ultimately allows us to make on-the-fly creative changes during production, saving our clients time and money because we’re capturing in-camera, final effects, rather than waiting until post.”

For Geduldick’s clients, real-time virtual production workflows offer a wide range of advantages. Moving decision-making to the front end of production requires prudent planning and previs, which results in cost savings. For critics of virtual production workflows, Geduldick offers a contrary point of view that highlights workflow freedoms. He shared, “For continuity and budgetary considerations, shooting on an LED volume offers the ability to capture the same time of day with the same color hue at any given time, allowing actors and crew members to focus more on the performance. You can also switch up your load (or scenes on the volume) and jump around. For example, if you need to do a pickup or if the screenwriter or director adds new lines, you can load the environment, move the set pieces back into place and shoot the scene. No permits or challenging reshoots are required, freeing up time for teams to focus more on the creative aspects of production, rather than the logistics.”

To ensure that even the most ambitious projects run without a hitch, Geduldick safeguards productions in case of accidents, power outages, or downtime. “Depending on the workflow that we’re using, I might have the engine record certain graphics or metadata coming in from sources. For example, if you have background and foreground elements via a Simulcam workflow, being able to record that composited image as a safety for editorial dailies and VFX is very helpful,” he shared. “I’ve also found that being able to ingest back into our systems through AJA I/O hardware — like KONA 5 — leaves us options to send a signal downstream, if we need it. This safety allows directors, VFX supervisors, and anyone on set to request and view playback through our system. It’s not a requirement, but since it takes a village to create a TV show, indie film, documentary, or even a live broadcast, you never know when you may be called on at a very integral time.”

As technologies become more accessible and continue to advance, Geduldick is optimistic about the future possibilities for the industry. He concluded, “The next evolution of virtual production tools will help innovate the way narratives are driven and help us tell stories in more impactful ways. The north star is the continual merging of imagination and possibilities, and there are so many resources available to learn how to leverage real-time technologies. There’s never been a richer time for self-education, so I encourage anyone interested in virtual production to dive in and apply a bit of time and effort.”