DP Kirk Morgan and director Rob Smith on music video “Faces in the Light”

Feb 6, 2025

Please share an outline of the production.

“Faces in the Light” is a music video for the South African electronic rap group PHFAT, featuring Nazieg.

As the track itself is about the obsessive and often surreal nature of our digital consumption, we wanted to find a way to bring that to life visually. The result is an infinite black space where our minds go to go numb and disappear, where we’re surrounded by everything and nothing at the same time.

Using the DJI Inspire 3 RTK system, which is essentially motion control in the sky, we were able to shoot a one-shot music video in multiple layers with (almost) frame accuracy.

How different was the production from previous projects?

The production was very different in that the actual shoot was small and contained, requiring very few takes once the system was set up. The pre-production, however, was a lot more demanding. We had to test and plan with a system that simply isn’t designed to be used the way we used it, so there were many test days, and we produced a very precise animatic based on those tests.

For example, a major lesson was that if the drone move is too dramatic, or climbs or descends too quickly, the layers temporarily drift out of sync, and the drone only returns to its GPS coordinates once it settles.

With this in mind, we made the moves slower and steadier in the parts of the song where we wanted duplication, allowing for easier compositing.

That being said, the sync, although incredibly close, was not always perfect, and therefore some tracking was necessary to align these layers so that there were no “slipping feet.”

What brief did the artist share about what they wanted from the music video? How did the director articulate what they were looking for?

The director, Rob, has previously made three other music videos with PHFAT, and I was involved in the most recent one, “Catherine” (which was also motion-control based, but on the ground). As a result, there wasn’t much of a brief, as the trust was already there. The rapper, Mike Zietsman, showed Rob some of their newer songs and was open to whichever track spoke to him. This one did, and despite some skepticism about whether it would be technically possible, Mike was very supportive of the concept and how it tied in with the song.

How did you decide upon the visual language?

We knew we wanted to use the DJI Inspire 3 RTK system in an interesting way, but at the same time we didn’t want it to feel too clean and clinical. PHFAT’s aesthetic is dark and edgy (and so is the song), so we wanted to match that visually and make sure the result didn’t look overly refined. Even if it was precise, it needed to feel a little rough around the edges, so choosing the right location (an abandoned airstrip/race track) and shooting at night were important. A second drone carrying a spotlight, other moving lights (thanks to gaffer Warrick Le Seur), dynamic choreography (Berlin Williams) and styling (Kaley Meyer) allowed us to create a look that felt more sinister. This was later emphasised by holographic FX (Luke Veysie) and a film-emulation grade by Kyle Stroebel at The Refinery.

How did you go about devising the shot list?

We mapped out the entire sequence using 3D Lego figures in Blender prior to the shoot. This changed according to our tests, but by the time we got to set, we simply recreated the 3D animatic in reality, matching its timing, and it all worked out perfectly with the music.

However, the DJI system does not work like normal motion-control systems, where you set the key points and then the time it takes to get between them. Instead, you set the key points and then the speed at which the drone moves between them, which led to a lot of trial and error on the day to line everything up with the animatic and the song. But we got there just in time for sunset.
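The time-versus-speed problem comes down to simple arithmetic: if the animatic dictates how long each move between key points should take, the speed to enter for that segment is the segment’s length divided by its duration. The sketch below is only an illustration under stated assumptions: the waypoint positions (local x/y/z offsets in metres) and arrival times are hypothetical, the path between points is assumed to be a straight line, and acceleration and deceleration at each point are ignored. Real flight paths will not match exactly, which is part of why trial and error was still needed.

```python
import math

# Hypothetical waypoints as (x, y, z) offsets in metres from the take-off point.
waypoints = [(0.0, 0.0, 3.0), (8.0, 6.0, 3.0), (8.0, 6.0, 15.0)]
# Hypothetical times (seconds into the song) at which the drone should reach
# each waypoint, e.g. read off the animatic.
arrival_times = [0.0, 10.0, 16.0]


def segment_speeds(points, times):
    """Return the constant speed (m/s) needed on each straight segment
    so the drone reaches every waypoint at its target time."""
    speeds = []
    for (p0, p1), (t0, t1) in zip(zip(points, points[1:]), zip(times, times[1:])):
        duration = t1 - t0
        if duration <= 0:
            raise ValueError("arrival times must be strictly increasing")
        distance = math.dist(p0, p1)  # straight-line length of the segment
        speeds.append(distance / duration)
    return speeds


print(segment_speeds(waypoints, arrival_times))
```

With these made-up numbers, the 10 m horizontal move over 10 s needs 1 m/s, and the 12 m climb over 6 s needs 2 m/s; a check like this would also flag the fast vertical segments that caused the layers to drift.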

This is the first motion-control video shot from a drone. How did you select which equipment to work with, and what led you to the DJI Inspire 3 with the RTK system?

The director, Rob, is one of the founders of Big Bird, so he has been immersed in the drone world since shooting with RC helicopters in 2012. This new system by DJI is the only one he and I have seen that could come anywhere close to achieving this. Big Bird had used it on shots in multiple commercials, often for day-to-night transitions within a single shot, so this was a progression of that idea: how far could we take it?

What other cameras and lenses did you use and why? Who supplied them?

We shot with the Inspire 3’s X9-8K full-frame camera and the DJI DL 35mm F2.8 LF ASPH lens, supplied by Big Bird. The X9’s 8K resolution allowed us to get the cleanest footage we could while shooting in low-light conditions. Even though film emulation and grain would be added after the VFX was complete, a clean image was important for the roto work. The 8K resolution also let us line up and reframe the layers at points where the motion control slipped, and it gave us a way to digitally push in closer to the performance than we felt comfortable flying the drone, while still holding decent image quality. We experimented with wider and tighter lenses in the testing phase and settled on the 35mm as the sweet spot: close enough to the action while still allowing for faster pull-outs. This was important because it let us reveal and conceal the dancers dynamically as the video progressed.
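As a rough illustration of that reframing headroom: the largest digital push-in available before the crop holds fewer pixels than the delivery format is the ratio of source width to delivery width, and cropping by that factor on the same sensor gives roughly the field of view of a proportionally longer lens. The widths below are assumptions, not production specs: an 8K-class source about 8192 pixels wide and a UHD delivery 3840 pixels wide.

```python
def max_punch_in(source_width: int, delivery_width: int) -> float:
    """Largest scale factor before the crop has fewer pixels than the delivery."""
    return source_width / delivery_width


def equivalent_focal_length(focal_mm: float, punch_in: float) -> float:
    """Approximate focal length giving the same field of view as the cropped
    frame, on the same sensor."""
    return focal_mm * punch_in


# Assumed widths: ~8K source, UHD delivery.
factor = max_punch_in(8192, 3840)
print(f"max punch-in: {factor:.2f}x")
print(f"35mm cropped ≈ {equivalent_focal_length(35, factor):.1f} mm")
```

Under these assumptions, the 35mm can be pushed in by a little over 2x, to roughly the framing of a 75mm lens, without upscaling.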

How did you work with drone company Big Bird for the aerial sequences?

As mentioned, Rob has been part of Big Bird from the beginning and, although he is no longer involved in its day-to-day operation (as he now focuses on directing), he has a pretty good technical understanding of it all. Big Bird co-founder Jonno Searle, however, was involved from day one to strategise and figure this out with us. The Big Bird team played a major role in identifying limitations during the testing phase and came up with creative ways to overcome our problems, such as the time-versus-speed dilemma in setting the keyframes. Almost their entire team was involved on the shoot day, as we had numerous drones in the air at once, all working together in a coordinated dance of camera and light movement to bring the piece together.

How did you go about devising the lighting schemes and what fixtures did you use and why? Did you have to create any custom camera and lighting rigs?

Approaching the lighting plan for this project definitely got the brain ticking, as the concept gave us the chance to really experiment with light. However, a key factor was that the camera would see a full 360 degrees, so working out where to place lamps, and at what point in the track, became the puzzle. We wanted to play into a darker, grungier look, so we kept the light predominantly single-source and back- or side-lit throughout. To maintain this through the rotating camera move, a drone light was the obvious choice for our hero source, as it could move counter to the camera.

We used Big Bird’s custom 400W LED with parabolic reflectors to narrow the beam and reduce spill, allowing the background to vignette into darkness. The rig is flown on an Alta 8 with two operators, one for the drone and one for the light/gimbal. The moving drone light created strong shadows that swept around Mike and the dancers like the arms of a clock passing time.

Our second light source, for the lower moving side light, was a battery-powered Aputure 600c Pro rigged to an electric go-kart that circled the dancers during one section of the song.

Lastly, we had an Aputure CS15 with a 36-degree spotlight, rigged high on a stand as a backlight for the finale. All the heads apart from the drone light were controlled via CRMX, and preset scenes in Luminair allowed us to transition between the lamps. The drone light was switched on and off manually by RC remote on musical cues.
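For readers unfamiliar with scene-based lighting control: CRMX is a wireless link that carries DMX to the fixtures, and a control app stores each scene as a set of channel levels, so moving from one scene to the next amounts to fading each channel from its old level to its new one. The sketch below shows a generic linear crossfade over 8-bit DMX levels (0 to 255). The channel names and levels are hypothetical, and Luminair’s actual fade curves and timing may well differ.

```python
def crossfade(scene_a: dict[str, int], scene_b: dict[str, int], t: float) -> dict[str, int]:
    """Linearly blend two scenes of 8-bit DMX levels.

    t=0 returns scene_a, t=1 returns scene_b; t is clamped to [0, 1].
    Channels missing from a scene are treated as 0 (off).
    """
    t = min(max(t, 0.0), 1.0)
    channels = scene_a.keys() | scene_b.keys()
    return {
        ch: round((1 - t) * scene_a.get(ch, 0) + t * scene_b.get(ch, 0))
        for ch in sorted(channels)
    }


# Hypothetical scenes: go-kart side light up, then handing over to the
# stand-mounted backlight for the finale.
side_light_scene = {"kart_600c_dimmer": 255, "backlight_cs15_dimmer": 0}
finale_scene = {"kart_600c_dimmer": 0, "backlight_cs15_dimmer": 255}

# Step through the fade in quarters.
for step in range(5):
    print(crossfade(side_light_scene, finale_scene, step / 4))
```

A linear fade is simply the easiest to reason about; many consoles also offer curved fades that better match how brightness is perceived.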

There were so many moving parts and timings that had to come together for the piece but, thanks to a great team and careful previz planning, we got there in the end.

What challenges did you encounter when shooting the project and how did you overcome those?

The main issue we encountered was the second drone carrying the drone light, which was not motion controlled. Because its aim wasn’t always consistent, the background lighting differed slightly from plate to plate, making compositing our layers tricky, but not impossible!

After many hours in After Effects, VFX artist Luke Veysie, with some help from me, cracked it.

How did you decide upon the colour palette and LUTs? Was there much in the way of changes in the DI, and which colourist were you working with?

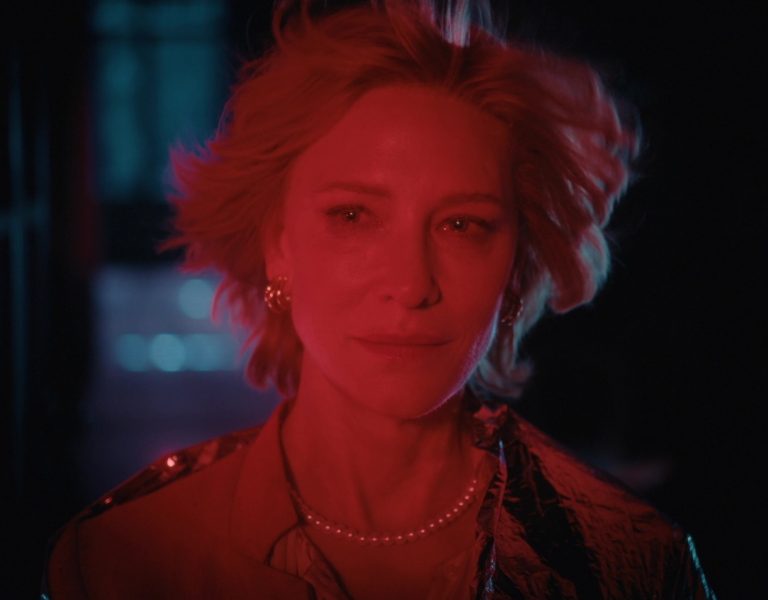

The colour palette leaned into the blues of the holograms and illuminated screens, and the reds of the car and the race track’s lines, which added a subconscious element of danger. These reds were graded to match and pop without being overly saturated. Blues were added to the shadows in the grade while holding skin tones, and contrast levels were tweaked to add a roll-off into the shadows. Colourist Kyle Stroebel from The Refinery Cape Town worked his magic and brought the tone of the piece together. Each layer was pre-graded, and once the VFX work was completed, the piece returned to him for a final film-emulation pass.

Is there a particular shot or sequence you are most proud of?

The end sequence, with the cars drifting around Mike, was particularly crazy to see come to life so accurately, thanks to the brilliant driving of Quniton Robertson.

He drove separate takes, spaced apart and in different directions, with incredible precision, which really elevated the video at its climax.

What lessons did this production teach you?

There really is no such thing as over-preparing for a shoot – it made the actual execution a joy for everyone to work on and the results speak for themselves.