Papertown founder talks working with Mo-Sys

Jul 30, 2021

Business Made Simple is one of the most successful management coaching organisations in the US. It creates a broad range of content to help its subscribers bring dynamism to their own careers.

For a new video series, Business Made Simple’s Donald Miller came up with the analogy that every business should run like an airplane: executive leadership in the cockpit; marketing and sales the two engines; and so on.

To create these videos, Business Made Simple explored a lot of ideas. They seriously considered renting a Boeing 737, wrapping it in their corporate identity, and filming the sessions in a hangar.

Creative production agency Papertown Entertainment (papertown.tv) came up with a modern, sophisticated and rather more cost-effective solution. Executive Creative Director Julian Smith and producer Tyler Torti would create the plane and the hangar entirely in CGI, and key the speaker onto the image.

Papertown had a very clear idea of what would set this series apart, and it was definitely not a static man sitting and talking in front of a parked plane. They wanted to move freely around both the speaker and the plane to keep viewers engaged, underscoring the key messages with the right visuals.

“Even ignoring the cost of renting and dressing a plane, having the real thing brings all sorts of other problems,” said Papertown founder and ECD Julian Smith. “Lighting a hangar and trying to get clean audio would have taken time and been risky.”

Papertown are very experienced in CGI and the concepts of mixed reality. “And we were aware of things like [Disney series] The Mandalorian, where they shot real-time final composites. That seemed like the way to go.”

The key to linking it all together was Mo-Sys StarTracker and Mo-Sys VP Pro. StarTracker is a precision camera tracking system that scans a random pattern of retro-reflective stickers, known as ‘stars’, on the ceiling. Once the StarTracker sensor attached to the production camera has learned the pattern, the operator is free to move anywhere in 3D space, and to pan, tilt, roll, zoom and focus the camera, while StarTracker accurately keeps track of everything.

In Papertown’s case the graphics engine was Epic Games’ Unreal Engine (UE), a powerful photo-realistic engine and the natural choice for real-time virtual graphics, including the plane and the hangar. Mo-Sys VP Pro software is integrated into UE and adds functionality and workflows that make virtual production simpler and more efficient. StarTracker and VP Pro working seamlessly together was the key to making this project work.

“Papertown knows world class CGI. But Mo-Sys’ camera technology blew our minds, blew the client’s mind,” Smith said. “The shoot felt like a party.”

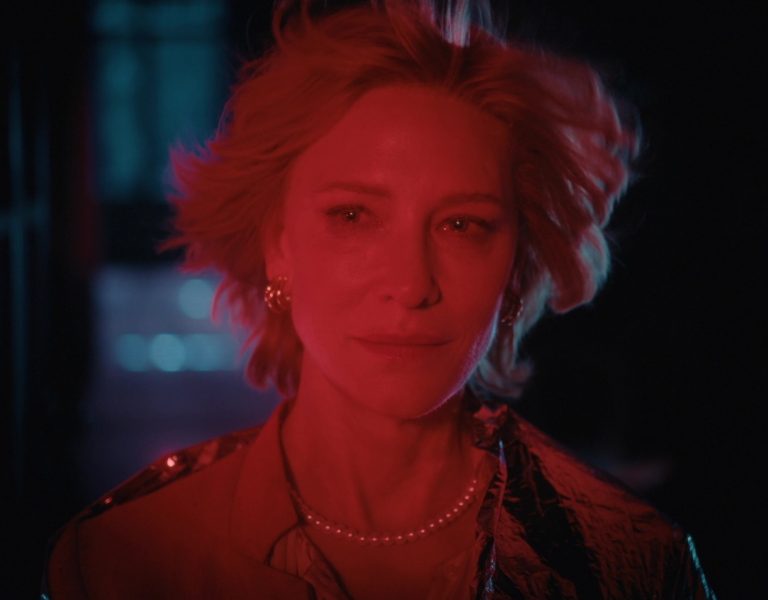

“Donald was shot against a green screen, with the camera on the shoulder,” he added. “Yet we walked away from set with finals – we were recording the composite of live action and CGI, in real time, with no need for post-production.”

Because the virtual scene is generated in real time, the camera operator sees not just the talent against a green screen but the finished composite. Framing never needs to be guessed: the operator sees the shot just as if it were a physical plane in a hangar.

The tight integration between Mo-Sys StarTracker, Mo-Sys VP Pro and Unreal Engine means that whatever the camera operator does is instantly matched in the CGI. As the camera moves around the speaker, even handheld, the parallax between the speaker and the plane stays correct. The operator can even pull focus from real to virtual objects.
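Why the tracked camera position matters for parallax can be illustrated with a minimal sketch, assuming an ideal pinhole camera that only slides sideways. The function names, the 1000-pixel focal length and the 2 m and 20 m depths are hypothetical values chosen for illustration; this is not how StarTracker, VP Pro or Unreal Engine compute anything internally. It shows that a near object (the speaker) shifts across the frame far more than a distant one (the plane) for the same camera move, so the virtual camera has to follow the real one exactly for the two to stay locked together.

```python
def project_x(point_x: float, point_z: float, cam_x: float, focal_px: float) -> float:
    """Horizontal image position (pixels from frame centre) of a point at
    lateral position point_x and depth point_z, seen by an ideal pinhole
    camera at lateral position cam_x looking straight ahead."""
    return focal_px * (point_x - cam_x) / point_z


def screen_shift(point_z: float, cam_move: float, focal_px: float) -> float:
    """How far a point directly ahead of the camera (point_x = 0) moves on
    screen when the camera slides sideways by cam_move metres."""
    before = project_x(0.0, point_z, 0.0, focal_px)
    after = project_x(0.0, point_z, cam_move, focal_px)
    return after - before


# Hypothetical scene: speaker 2 m from the camera, plane 20 m away,
# camera moved 0.5 m to the right, 1000 px focal length.
speaker_shift = screen_shift(2.0, 0.5, 1000.0)
plane_shift = screen_shift(20.0, 0.5, 1000.0)
print(speaker_shift, plane_shift)  # -250.0 -25.0
```

If the virtual camera ignored the tracking data, the CGI plane would not move at all while the keyed speaker moved 250 pixels, and the composite would visibly slide apart.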

“Being able to use StarTracker, VP Pro and Unreal extended an insane amount of value to our clients,” Smith said. “It took our photo-real CGI to a whole new level. This is a game changer.”

The production took place in February 2021, so COVID-19 restrictions were another big consideration for the team. The shoot itself was in Nashville, but many members of the team were able to contribute remotely.

“No-one had ever attempted this level of mixed reality in Nashville before,” Smith said. “We understood all the components needed, and we had to pull them together from all over the world. We had no experience with Mo-Sys before this shoot, but we didn’t need any support on set: installing it, lining up the cameras and interfacing to Unreal was very straightforward.”

As a result, Papertown was able to shoot the three-hour video series in just two days on set. That is remarkable productivity, made possible by seeing what was being shot in both the real and virtual worlds and recording it as a combined output.

Looking to the future, Smith said, “Audiences expect more than ever from their content. As creators we have to find new ways to provide value. Clients come to us to ask what is possible. The sky is the limit now.”

“Mo-Sys’ products unlock so much potential, as this production shows. There are no more excuses for brands and creators, and that is exciting.”