A Near Real-Time workflow combines key advances in lens calibration, machine learning and real-time rendering to deliver higher-quality composites of what has just been shot to the filmmakers within minutes.

The artistry of cinema has always advanced when filmmakers push the limits of technology. The new film Comandante is breaking new ground: it has devised a novel Near Real-Time (NRT) workflow that allows post-production to start live during shooting.

Director Edoardo de Angelis’ film has been in pre-production for over a year, and by the time principal photography begins this November, the team will be confident that they can achieve every shot, having already seen a high-quality NRT render of it.

Comandante follows the true story of an Italian submarine commander in World War II. Filming that story for real, or with traditional VFX techniques, can be expensive. Instead, the production opted to shoot in a water tank with an LED screen to capture the integral light and reflections needed to make the shots realistic.

Producer Pierpaolo Verga brought on board visual effects designer Kevin Tod Haug (Fight Club), who enlisted other collaborators in virtual production (VP), lensing and VFX, notably David Stump ASC.

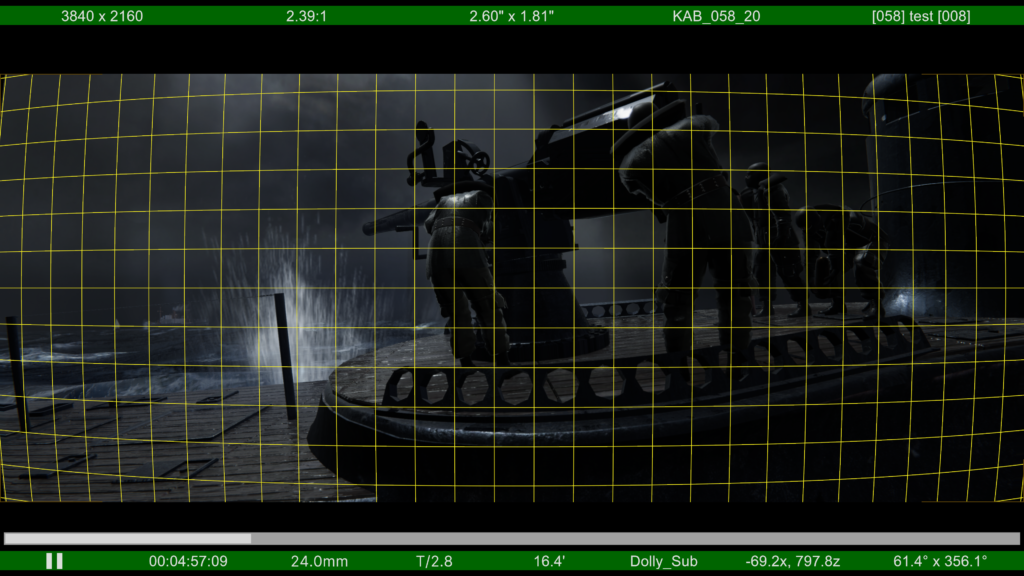

The show required water-resistant outdoor LED panels, yet these lower-resolution (higher pixel pitch) panels are unsuitable for in-camera visual effects (ICVFX) because of the severe moiré patterns they create.

“The challenge was how to keep the rich, dynamic light cast on the talent, water and physical set, and replace the section of LED seen through the camera with a higher quality version?” explains Haug. “Additionally, we wanted to do this quickly enough on-set, so that director and DP can be confident in seeing what they’ve shot, make the right selects and walk away knowing they’ve got it.”

Comandante has gone through a number of R&D phases to test the workflow. The idea is that complex scenes (for example, actors who need to appear on a submarine in the middle of the ocean but are actually filmed in a dock) can be shot with new tools that let the director and other filmmakers see close-to-final, or even final, template shots almost in real time.

To cap it all, De Angelis wanted to shoot large format on the ARRI Mini with anamorphic optics.

“This is hell under any circumstances for VFX since everything is shallow focus and warped and hard to track,” says Haug. “Because many of the scenes are set in darkness, with water effects and a moving boat we were not expecting to create a volume in a typical sense. We are leaning heavily into being able to pull metadata.”

A SUMMARY OF NRT WORKFLOW

During filming, all camera metadata and lens information is recorded from the camera and streamed into Epic Games’ Unreal Engine. This data is then fed into Nuke, where the UnrealReader tool enables the filmmakers to re-render the background in high quality, removing the need for higher-resolution LED walls.
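Re-rendering the background only works if each plate frame can be matched to the camera and lens data recorded for that exact frame. The article does not describe the data format, so the sketch below assumes non-drop-frame timecode at an integer frame rate and a hypothetical `FrameMetadata` record, and simply indexes the streamed records by absolute frame number so that a plate's timecode can be looked up directly.

```python
from dataclasses import dataclass

FPS = 24  # assumption: integer frame rate, non-drop-frame timecode


def timecode_to_frame(tc: str, fps: int = FPS) -> int:
    """Convert 'HH:MM:SS:FF' non-drop-frame timecode to an absolute frame index."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not 0 <= ff < fps:
        raise ValueError(f"frame field {ff} out of range for {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


@dataclass
class FrameMetadata:
    # Hypothetical per-frame record; field names and units are illustrative only.
    timecode: str
    position_m: tuple[float, float, float]    # tracked camera position
    rotation_deg: tuple[float, float, float]  # pan, tilt, roll
    focal_length_mm: float
    focus_distance_m: float


class MetadataStore:
    """Indexes streamed records by frame so any plate frame can be matched exactly."""

    def __init__(self, fps: int = FPS) -> None:
        self.fps = fps
        self._by_frame: dict[int, FrameMetadata] = {}

    def record(self, meta: FrameMetadata) -> None:
        self._by_frame[timecode_to_frame(meta.timecode, self.fps)] = meta

    def lookup(self, tc: str) -> FrameMetadata:
        # Raises KeyError if no record was captured for this frame.
        return self._by_frame[timecode_to_frame(tc, self.fps)]
```

For example, after `store.record(...)` for timecode `10:00:00:12`, calling `store.lookup("10:00:00:12")` returns that frame's record, which is what a re-render for that plate frame would consume.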

The virtual and physical elements are merged in Nuke, where the CopyCat tool uses machine learning to separate the LED wall from the actors. Because the background is re-rendered from the true recorded positions of the tracked camera, the lag between tracking and real-time rendering is removed, giving the filmmakers freedom over their shot movements.
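The latency point can be made concrete. A live LED wall is rendered from a camera pose that arrives a few frames late, whereas an NRT re-render can use the pose recorded for the same frame as the plate. In this toy sketch the dolly speed, the three-frame delay and all names are assumptions chosen for illustration, not measurements from the production.

```python
import math

# Toy data (assumed): a dolly moving 5 cm per frame along x at a fixed height.
tracked = [(0.05 * f, 1.5, 0.0) for f in range(48)]

LATENCY_FRAMES = 3  # assumed delay between tracking and the live wall render


def live_wall_pose(frame: int) -> tuple:
    """Pose the live LED wall was rendered with: the one from a few frames earlier."""
    return tracked[max(frame - LATENCY_FRAMES, 0)]


def nrt_pose(frame: int) -> tuple:
    """Pose used by the near-real-time re-render: the one recorded for this frame."""
    return tracked[frame]


frame = 24
live_err = math.dist(live_wall_pose(frame), tracked[frame])  # metres of mismatch
nrt_err = math.dist(nrt_pose(frame), tracked[frame])
```

With these numbers the live wall lags by three frames of travel (about 15 cm), while the re-render has no positional mismatch at all.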

LENS CALIBRATION AND METADATA

Central to marrying the real and virtual is modelling the physical lens, which allows the filmmakers to distort the Unreal render (or undistort the live-action plate). Instead of shooting lens grids, the team turned to Cooke Optics and the accurate factory lens mapping of its /i Technology system, which embeds distortion and shading parameters in each lens.
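Distorting a CG render to match a lens means moving every pixel according to the lens's distortion model. Cooke's actual /i distortion model is not described here, so the sketch below swaps in a generic polynomial radial model with separate x and y coefficients (a loose stand-in for anamorphic asymmetry) on normalised image coordinates. `distort` is the direction applied to the Unreal render; `undistort` inverts it by fixed-point iteration, as would be needed for the live-action plate. All coefficient values are made up.

```python
def _scale(r2: float, k: tuple[float, float]) -> float:
    # Polynomial radial term 1 + k1*r^2 + k2*r^4.
    return 1.0 + k[0] * r2 + k[1] * r2 * r2


def distort(x: float, y: float, kx=(0.0, 0.0), ky=(0.0, 0.0)):
    """Map an undistorted normalised point to where the lens would image it.

    Hypothetical model: separate x/y coefficients loosely mimic anamorphic
    asymmetry. This is NOT Cooke's /i model.
    """
    r2 = x * x + y * y
    return x * _scale(r2, kx), y * _scale(r2, ky)


def undistort(xd: float, yd: float, kx=(0.0, 0.0), ky=(0.0, 0.0), iterations: int = 20):
    """Invert distort() by fixed-point iteration; adequate for mild distortion."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        x, y = xd / _scale(r2, kx), yd / _scale(r2, ky)
    return x, y
```

To warp a whole image, one would typically loop over output pixels, map each through the appropriate direction of the model and sample the source image there; the point mapping above is the core of that step.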

“Until now the ultimate success of putting a lens into a CG pipeline has been to simply ensure the frame lines are correct,” says Stump, who is advising Comandante’s DP Ferran Paredes Rubio. “The standard approach is to ignore what happens inside of any real-world lens in terms of how it bends light onto a rectangular sensor. Yet the technology to do exactly this is right before our eyes.

“We take the Cooke coefficients and apply them automatically to the image enabling us to get highly accurate distortion maps, even as the lens ‘breathes’.”
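“Breathing” means the distortion changes as focus is pulled, so coefficients have to be re-evaluated per frame rather than fixed once per shot. With /i the lens reports its data frame by frame; purely for illustration, the sketch below assumes a hypothetical calibration table of a single coefficient (`k1`) sampled at a few focus distances and linearly interpolates it for each frame's recorded focus distance.

```python
from bisect import bisect_left

# Hypothetical calibration: coefficient k1 sampled at focus distances in metres.
CALIBRATION = [
    (0.5, -0.060),
    (1.0, -0.052),
    (2.0, -0.047),
    (5.0, -0.044),
]


def k1_at_focus(focus_m: float) -> float:
    """Linearly interpolate k1 for the current focus distance, clamping at the ends."""
    distances = [d for d, _ in CALIBRATION]
    if focus_m <= distances[0]:
        return CALIBRATION[0][1]
    if focus_m >= distances[-1]:
        return CALIBRATION[-1][1]
    i = bisect_left(distances, focus_m)  # first sample at or beyond focus_m
    (d0, k0), (d1, k1) = CALIBRATION[i - 1], CALIBRATION[i]
    t = (focus_m - d0) / (d1 - d0)
    return k0 + t * (k1 - k0)


# Per-frame use during a focus pull (focus distances are made up).
focus_pull = [0.8, 1.2, 1.6, 2.0]
per_frame_k1 = [k1_at_focus(f) for f in focus_pull]
```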

When tested against a checkerboard grid, the result was astonishing: the aspect ratios of the checkers were perfectly consistent across the entire grid. “The goal is that the director can move on to the next set-up with confidence,” says Haug. “That requires that the image they see is not a fudge between a spherical reference coming out of an Unreal Engine and the actual anamorphic from the camera. Using a Cooke 40mm anamorphic with /i Technology we’re able to lock in the comp of CG and live in Qtake because the output of Unreal is now warped to precisely match the output of the camera.”
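The article does not say how the checker result was measured. One simple way to quantify it, assumed here for illustration, is to take detected grid corners in the corrected image and compare each cell's width-to-height ratio: a well-matched lens model gives near-identical ratios everywhere, so their spread (coefficient of variation) approaches zero. Corner detection itself is out of scope; `corners` is a hypothetical input.

```python
from statistics import mean, pstdev


def cell_aspect_ratios(corners):
    """corners[row][col] = (x, y) pixel position of each detected grid corner.

    Returns width/height for every cell, measuring width along the cell's top
    edge and height along its left edge (fine for a near axis-aligned grid).
    """
    ratios = []
    for r in range(len(corners) - 1):
        for c in range(len(corners[r]) - 1):
            x0, y0 = corners[r][c]
            x1, _ = corners[r][c + 1]
            _, y1 = corners[r + 1][c]
            ratios.append((x1 - x0) / (y1 - y0))
    return ratios


def aspect_spread(corners) -> float:
    """Coefficient of variation of cell aspect ratios; 0.0 means perfectly uniform."""
    ratios = cell_aspect_ratios(corners)
    return pstdev(ratios) / mean(ratios)
```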

“When you see this live it is dead obvious,” adds Stump. “Everyone has watched superhero movies and noticed that there’s just something a little wrong about the way an animated character fits in the frame. NRT will change that because CG characters will look and feel like they were photographed through the same lens at the same time as ‘real’ objects.”

SCREEN-FREE VP

The second ‘Aha’ moment for the production was realising the potential of Foundry’s AI roto tool CopyCat. Once CopyCat has been trained, the LED wall can be removed during photography. In theory, this means scenes could be shot on location, with backgrounds replaced and comped in near real time, without using LED screens at all.
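Once a trained model such as CopyCat produces a matte separating the actors and set from the LED wall, replacing the wall is a standard “over” composite. The sketch assumes single-channel images stored as nested lists, a matte where 1.0 means keep the plate and 0.0 means use the re-rendered background, and an unpremultiplied plate. It shows the comp arithmetic only, not how CopyCat or Nuke implement it.

```python
def composite(plate, matte, background):
    """Per-pixel 'over' comp: out = a * plate + (1 - a) * background.

    a = 1.0 keeps the photographed plate (actors, set); a = 0.0 takes the
    re-rendered background in place of the LED wall; values in between blend.
    """
    return [
        [a * p + (1.0 - a) * b for p, a, b in zip(prow, mrow, brow)]
        for prow, mrow, brow in zip(plate, matte, background)
    ]
```

Because the comp only trusts the matte, it behaves the same whether the “background” pixels came from the LED wall or, as Haug describes below, from beyond its edges.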

Says Haug, “There was a point in testing when CopyCat had got sophisticated enough to understand the difference between foreground and background and would replace the background with the Unreal environment even if the camera was pointing outside the wall. In our scenario the wall is just a window to the environment.”

NEW PARADIGM

The techniques pioneered on Comandante are set to change the way filmmakers work with real-time tools, giving directors more confidence in their takes and enabling them to push the limits of VP further than ever before.

“NRT compresses the iterative process of finishing a film,” says Stump. “Before, we used to edit the entire movie and then plug in VFX. That interactivity is less creative than the immediacy of this workflow that gets you closer on set to what you are going to have in final. If you build the interaction into the editorial process then it’s all one big creative blend.”

The NRT workflow allows the use of lower-resolution LED panels without the moiré artefacts usually associated with them. This gives productions the option to use more cost-effective panels, or higher-contrast outdoor panels, while keeping the richness of the LED light.

The workflow helps bridge the gap between on set and post. Using the recorded camera tracks and advanced lens metadata from Cooke with the UnrealReader makes both on-set and offline VFX easier. And by re-rendering the LED wall with true, timecode-accurate camera motion, latency in the VP system is mitigated.

“Hopefully a lot of the movie is finished before we’ve finished shooting it,” says Haug. “From a VFX point of view we feel we can deliver all these shots by the end of the director’s cut.”

NRT ensures that all the metadata needed for a shoot is tracked and stored efficiently. This is crucial, because that information is needed to bring in the VFX elements and show the director the work in real time.

Comandante commences principal photography in November.

–

/i Technology

Cooke’s ‘Intelligent’ Technology is a metadata protocol that enables film and digital cinema cameras to automatically record key lens data for each frame shot, which can then be provided to visual effects teams digitally.

Equipment is identified by serial number and lens type, and the data includes focal length, focus distance, zoom position, near and far focus, hyperfocal distance, T-stop, horizontal field of view, entrance pupil position, inertial tracking, distortion, and shading data. Information can be stored digitally for every frame, at any frame rate up to 285 fps.
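The per-frame data listed above maps naturally onto a simple record. The field names, units and the subset of fields below are assumptions for illustration, not the /i protocol's actual encoding. The helper shows how one listed quantity, horizontal field of view, relates to focal length and sensor width under a pinhole approximation; for an anamorphic lens, the horizontal term is scaled by the squeeze factor.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LensFrame:
    # Illustrative subset of /i-style fields; names and units are assumptions.
    serial_number: str
    lens_type: str
    frame: int
    focal_length_mm: float
    focus_distance_m: float
    t_stop: float


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float,
                       squeeze: float = 1.0) -> float:
    """Pinhole approximation: HFOV = 2 * atan(squeeze * width / (2 * focal length))."""
    return math.degrees(2 * math.atan(squeeze * sensor_width_mm / (2 * focal_length_mm)))
```

Under this approximation a 2x anamorphic covers the same horizontal field as a spherical lens of half its focal length on the same sensor width.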

The electronics inside each /i lens connect to resistive sensing strips calibrated in absolute values. As soon as power is applied, the data is available instantly, without the need for an initialisation procedure. The potential for human error is removed because the script supervisor no longer needs to write down lens settings manually for every shot.

/i Technology is an open protocol fully integrated across Cooke Optics’ lens range and supported by industry partners including ARRI, RED and Sony.

It is designed to help save time and resources in post-production by providing precise data in the most accessible way.

Post-production artists can sync the lens data to the 3D camera data to produce a more natural-looking 3D model, with peace of mind that the data is of the highest accuracy.

–

This article was sponsored by Cooke Optics.

Words by Adrian Pennington.

Images: Habib Zargarpour at Unity