Being able to obtain shading and distortion metadata is vital to fast, accurate and complex shot creation for VFX and virtual production.

Tracking metadata efficiently from capture downstream through post-production has long been a vexing issue, and one that the rapid rise of virtual production has thrown into sharp focus.

Often, metadata doesn’t make it downstream for VFX use at all, or it arrives in an unreliable or incomplete form. Ideally, you need to know parameters such as the depth of field, the focus distance, and the lens distortion and shading characteristics, so that 3D rendering and compositing of CG assets match the creative look captured through the camera. On-set virtual production exacerbates this problem because the process needs to happen in real time. That’s why acquiring, encoding, and mapping camera and lens metadata has become one of the most important areas of innovation in recent times.

Lens metadata is generated by sensors within the lens itself and can be fixed information, like focal length or the firmware version of the lens, as well as dynamic information such as calibrated focusing distance and t-stop value, depth of field and hyperfocal distance, horizontal field of view and entrance pupil position.
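To make the dynamic values listed above more concrete, here is a minimal sketch of how hyperfocal distance, depth of field and horizontal field of view relate to focal length, aperture and focus distance. It uses the standard thin-lens approximations rather than anything taken from a lens protocol. The function names, the 0.025 mm circle of confusion and the 36 mm sensor width are assumptions chosen for illustration, and the formulas take an f-number, whereas a cine lens reports a T-stop. In practice the lens reports its own calibrated values.

```python
import math

# Illustrative thin-lens approximations; all names and defaults are assumptions.

def hyperfocal_mm(focal_mm, f_number, coc_mm=0.025):
    """Hyperfocal distance H = f^2 / (N * c) + f, in millimetres."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm

def depth_of_field_mm(focal_mm, f_number, focus_mm, coc_mm=0.025):
    """Return (near, far) limits of acceptable sharpness in millimetres."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = focus_mm * (h - focal_mm) / (h + focus_mm - 2 * focal_mm)
    # At or beyond the hyperfocal distance the far limit is infinity.
    if focus_mm >= h:
        far = math.inf
    else:
        far = focus_mm * (h - focal_mm) / (h - focus_mm)
    return near, far

def horizontal_fov_deg(focal_mm, sensor_width_mm):
    """Horizontal field of view for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))

if __name__ == "__main__":
    near, far = depth_of_field_mm(50, 2.8, 3000)
    print(f"hyperfocal: {hyperfocal_mm(50, 2.8) / 1000:.2f} m")
    print(f"sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
    print(f"horizontal FOV: {horizontal_fov_deg(50, 36):.1f} degrees")
```

Because focus and iris change during a take, these values change frame by frame, which is why they are treated as dynamic metadata.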

This data is communicated via Cooke/i* technology, a pioneer in this space, but two crucial elements are not currently included: shading and distortion data.

With the launch of ZEISS eXtended Data in 2017 and the release of the new CinCraft Mapper platform in 2022, ZEISS now offers multiple solutions for obtaining this information. These take the Cooke/i* protocol a stage further and enable users to obtain frame-accurate shading and distortion data, both in real time and for export for use in post-production.

It is an innovation that is vital to producing the most efficient, accurate VFX workflows.

Innovation for VFX

The two key VFX workflows in which shading and distortion data are crucial are compositing and matchmoving.

Compositing

The film crew records the footage, which has distortion and shading present. A VFX artist renders the CGI assets, which have no lens characteristics at all. Compositing software is then used to combine the live-action footage with the CGI. Final adjustments are made to the image by adding back lens characteristics like distortion, shading and film grain.

As it stands, distortion must be removed from the live footage in order to combine it with CGI. It is then re-added, along with shading, for a realistic-looking final image.
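The shading half of that round trip can be sketched in a few lines. The example below assumes linear-light pixel values and uses a simple radial falloff as a hypothetical stand-in for real shading data; `radial_gain`, `composite_with_shading` and the `falloff` parameter are illustrative names, not part of any ZEISS tool. It flattens the plate, places the CG element over it, then re-applies the same shading to the combined image so that both darken identically towards the corners.

```python
def radial_gain(x, y, width, height, falloff=0.4):
    """Hypothetical vignette: gain 1.0 at the centre, (1 - falloff) in the corners.

    Assumes width and height are both at least 2.
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    r2 = ((x - cx) ** 2 + (y - cy) ** 2) / (cx ** 2 + cy ** 2)
    return 1.0 - falloff * r2

def composite_with_shading(plate, cg, alpha, falloff=0.4):
    """Remove shading from the plate, comp CG over it, then shade the result."""
    height, width = len(plate), len(plate[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            gain = radial_gain(x, y, width, height, falloff)
            flat = plate[y][x] / gain  # plate with shading removed
            a = alpha[y][x]
            comp = cg[y][x] * a + flat * (1 - a)  # unpremultiplied "over"
            row.append(comp * gain)  # shading re-applied to plate and CG alike
        out.append(row)
    return out

if __name__ == "__main__":
    # A flat grey card shot through the hypothetical lens, 3x3 pixels.
    plate = [[0.5 * radial_gain(x, y, 3, 3) for x in range(3)] for y in range(3)]
    cg = [[1.0] * 3 for _ in range(3)]
    alpha = [[0.0] * 3 for _ in range(3)]
    alpha[0][0] = 1.0  # a white CG element in the top-left corner only
    for row in composite_with_shading(plate, cg, alpha):
        print([round(v, 3) for v in row])
```

With measured shading data the `radial_gain` stand-in would be replaced by the per-pixel values for the frame in question, which is where frame-accurate metadata removes the guesswork.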

Matchmoving

The film crew moves the physical camera and records the footage. Matchmoving software replicates the physical camera movement by tracking points in the footage and generates a virtual camera with the exact same movement as the physical camera.

Once again, distortion must be removed before VFX work begins, because it displaces the potential tracking points within the video clip and makes matchmoving inaccurate or even impossible.
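As a rough illustration of what removing distortion from tracking points involves, the sketch below uses a one-coefficient radial model, a common textbook simplification and not the model any particular lens or matchmoving package uses. The coefficient `k1` and the function names are hypothetical. Coordinates are normalised so that the image centre is (0, 0). The forward model has no convenient closed-form inverse, so the undistortion is solved by fixed-point iteration, which converges for moderate distortion.

```python
def distort(x, y, k1):
    """Apply one-coefficient radial distortion to a normalised point."""
    scale = 1 + k1 * (x * x + y * y)
    return x * scale, y * scale

def undistort(xd, yd, k1, iterations=20):
    """Invert distort() by fixed-point iteration, starting from the distorted point."""
    x, y = xd, yd
    for _ in range(iterations):
        scale = 1 + k1 * (x * x + y * y)
        x, y = xd / scale, yd / scale
    return x, y

if __name__ == "__main__":
    k1 = -0.1  # hypothetical barrel distortion
    tracked = [distort(x, y, k1) for x, y in [(0.0, 0.0), (0.4, 0.3), (0.8, 0.6)]]
    for xd, yd in tracked:
        xu, yu = undistort(xd, yd, k1)
        print(f"tracked ({xd:.4f}, {yd:.4f}) -> undistorted ({xu:.4f}, {yu:.4f})")
```

The quality of the result depends entirely on how well the coefficients describe the lens at that focus and iris setting, which is the part that recorded lens data supplies.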

The benefits to VFX workflows of being able to use frame-accurate shading and distortion lens data should be obvious. It takes away the guesswork of interpolation, removes one whole step in the process and means VFX artists and studios can generate complex shots faster and more accurately.

Benefits in pre-production

However, the benefits do not end there. There are advantages to pre-production too. At a minimum, there’s no longer a need to shoot and subsequently analyse lens grids in order to determine the shading and distortion characteristics of your lenses, because ZEISS already provides them.

Considering that shooting lens grids is a very manual process, with an element of guesswork still required to interpolate between different camera/lens settings, you can now remove the risk of human error.

Additionally, there’s no need for a camera assistant or focus puller to calibrate any lenses or generate lens data files. Since distortion, vignetting and other lens characteristics are embedded into the lens and can be recorded electronically on set for each video clip, there’s no need to shoot lens grids or grey cards for VFX. This again saves time and provides much more accurate data.

Benefits on-set

There are multiple advantages to capturing this additional metadata on set too. For example, the script or VFX supervisor does not need to log lens data (focal length, focus, iris) by hand. This information can be recorded inside the video clip for each frame.

The camera assistant can exchange lenses faster. There’s no need to set up the lens focal length or type in the camera, since the lens is recognised automatically.

The DP and their camera team also gain a superior overview of lens settings. On sets with multiple cameras in particular, it is essential to gauge the different configurations at a glance (from focal length and focus to iris, depth of field, etc.).

What’s more, shots with a lot of movement, like car chases with cranes or Steadicam, become easy to devise and can be set up with greater freedom by the crew.

Benefits for virtual production

The difference on a virtual production set is that the shading and distortion data is required in real time. However, the same issues and principles as in the traditional VFX workflow still apply.

For example, when shooting on a VP set with a wide-angle lens, there’s going to be an element of distortion and curvature as you move away from the centre of the frame: the further you are from the centre, the more your corners will bend, as will your walls and floor.

If the intention then is to have CG objects or characters present next to your live subjects, the slight curvature of the floor, for example, may make it appear that the computer-generated imagery is floating an inch or so off the floor, because the effect of the distortion has not been taken into account in the CG world.
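The floating effect can be put into numbers with a small sketch. It assumes a 1920x1080 frame and a hypothetical one-coefficient radial model with `k1 = -0.05`; both are illustrative values, not measurements of any real lens. For a floor contact point rendered without distortion, the code reports how far the same point sits in the distorted plate, which is the gap a viewer would see between a CG foot and the real floor.

```python
import math

def distort_pixel(px, py, width, height, k1):
    """Map an undistorted pixel to its position in the distorted plate."""
    cx, cy = width / 2, height / 2
    norm = math.hypot(cx, cy)  # half-diagonal, so the corners sit at radius 1
    x, y = (px - cx) / norm, (py - cy) / norm
    scale = 1 + k1 * (x * x + y * y)
    return cx + x * scale * norm, cy + y * scale * norm

def offset_px(px, py, width, height, k1):
    """Distance in pixels between the undistorted CG point and the plate."""
    dx, dy = distort_pixel(px, py, width, height, k1)
    return math.hypot(dx - px, dy - py)

if __name__ == "__main__":
    width, height, k1 = 1920, 1080, -0.05  # hypothetical frame and lens
    for label, point in [("near centre", (960, 600)), ("near corner", (1800, 1000))]:
        gap = offset_px(*point, width, height, k1)
        print(f"{label}: CG sits {gap:.2f} px away from the plate")
```

In this model the displacement grows with the cube of the distance from the centre, so a mismatch that is invisible mid-frame becomes obvious towards the edges.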

So not only do you want to be able to track the shading and distortion characteristics, but you need to apply them in real time, through a game engine, to ensure realistic final pixel on set.

You can do this now with ZEISS smart lens technology.

Summing up

The maturation of on-set virtual production techniques has offered lots of new options for content creators, but it has also reinforced the need for the industry to find better solutions to address a long-time challenge.

ZEISS has risen to this challenge and built on the ground-breaking work by Cooke to obtain frame-accurate, dynamic shading and distortion characteristics from the lens. While this is essential to helping VFX teams match CGI assets with real-life elements, the ability to automatically capture more frame-accurate data at source also saves time, eliminates errors and enables the whole creative team to create a better picture.

–

How can you obtain and use this lens metadata?

There are two main routes users can take to obtain ZEISS lens metadata:

ZEISS eXtended Data

- Provides real-time shading and distortion data, which can also be captured, recorded and exported for use in post-production

- Metadata can be obtained via the external connection point on the barrel of the lens, or embedded directly into the video files via the PL mount four-pin connector (with compatible cameras)

- Third-party accessories, such as the DCS LDT-V1, enable live lens data streaming to Unreal Engine

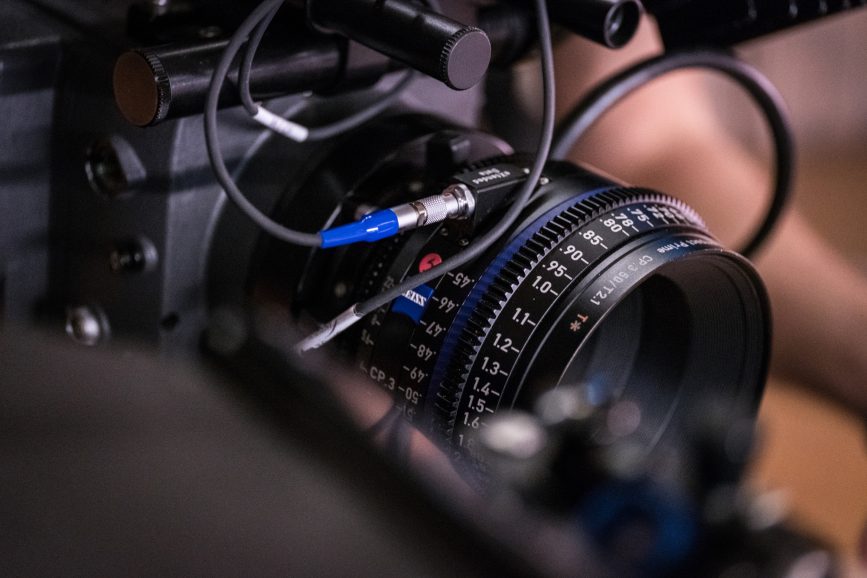

- Supreme Prime, Supreme Prime Radiance and CP.3 lens families are all available with eXtended Data capability

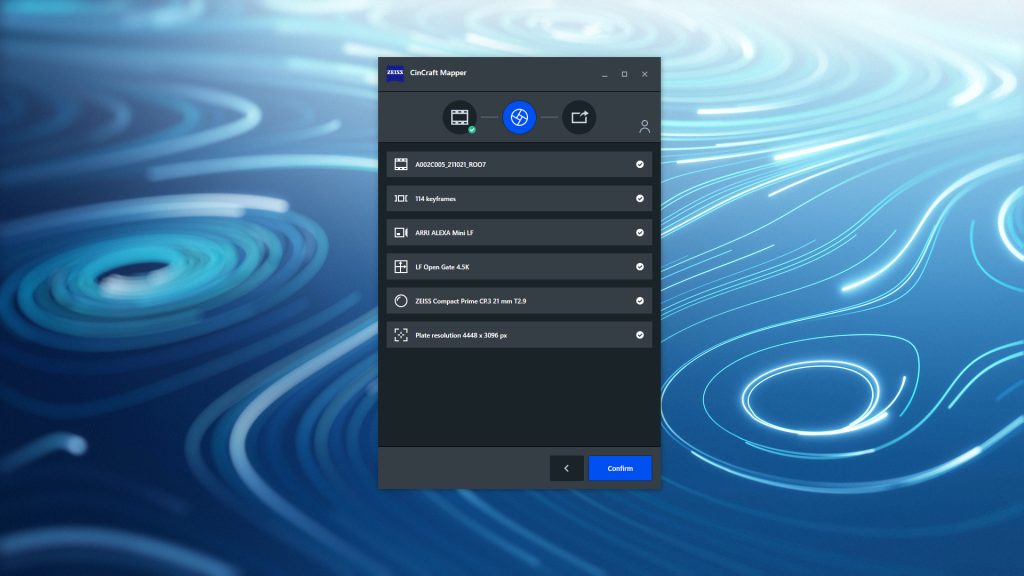

ZEISS CinCraft Mapper

- Perfect for compositing and matchmoving in post-production

- When paired with compatible cameras, lens metadata can be saved directly to the RAW file and converted to an EXR sequence

- The EXR sequence is then uploaded to the Mapper platform

- Frame-accurate ST and Multiply maps are provided at the click of a button, compatible with industry-leading VFX software

- Distortion data also available on a number of older ZEISS lenses, including Master Primes and Ultra Primes
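For readers unfamiliar with the ST and Multiply maps mentioned in the list above, the sketch below shows how such maps are typically consumed. It assumes the common compositing convention in which an ST map stores, for every output pixel, the normalised (0 to 1) source coordinates to sample from, and a Multiply map stores a per-pixel gain. The function names are hypothetical, and nearest-neighbour sampling is used for brevity where production software would apply proper filtering. Coordinate origin and pixel-centre conventions vary between packages, so treat this as a sketch rather than a description of the Mapper output.

```python
def apply_st_map(image, st_map):
    """Warp a single-channel image by looking up each output pixel's source.

    image[y][x] is a float; st_map[y][x] is an (s, t) pair in the range 0..1.
    """
    height, width = len(image), len(image[0])
    out = []
    for st_row in st_map:
        out_row = []
        for s, t in st_row:
            # Nearest-neighbour lookup, clamped to the image bounds.
            sx = min(width - 1, max(0, round(s * (width - 1))))
            sy = min(height - 1, max(0, round(t * (height - 1))))
            out_row.append(image[sy][sx])
        out.append(out_row)
    return out

def apply_multiply_map(image, gain_map):
    """Scale every pixel by the matching value in the gain map."""
    return [[p * g for p, g in zip(img_row, gain_row)]
            for img_row, gain_row in zip(image, gain_map)]

if __name__ == "__main__":
    image = [[0.1, 0.2, 0.3],
             [0.4, 0.5, 0.6]]
    # An ST map that mirrors the image horizontally (illustrative only).
    mirror = [[(1 - x / 2, y / 1) for x in range(3)] for y in range(2)]
    gain = [[0.5, 1.0, 0.5],
            [0.5, 1.0, 0.5]]
    warped = apply_st_map(image, mirror)
    print(apply_multiply_map(warped, gain))
```

Because the maps are plain images, the same pair can be applied in any package that supports per-pixel coordinate lookup and multiplication.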

Find out more about eXtended Data: www.zeiss.ly/XD

Register for free and test the CinCraft Mapper at: www.Zeiss.ly/Mapper

–

Words: Adrian Pennington

This article is paid for by ZEISS